Choose your file format and encoding wisely

I’ve been working with memoQ (a CAT tool) for more than four years now. It’s quite good compared to other localisation tools, which maybe isn’t such a great achievement, as there aren’t many of them. But anyway, it’s decent.

But the memoQ team made some weird design decisions early on. The most annoying one is the choice of encoding. The entire world uses UTF-8, which is great, and everything works fine with it. memoQ, however, decided to use UTF-16LE, and it’s a nightmare. When you export a Translation Memory to a TMX file and its languages aren’t Asian or Middle Eastern, the file ends up roughly twice as big as it would be in UTF-8, because every ASCII character (including all the XML markup) takes two bytes instead of one. I know, because I had to develop a solution that converts our exports to UTF-8 to save storage space and ease the transfer.
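Here is a minimal Python sketch of such a conversion. It’s an illustration, not the actual tool mentioned above, and it assumes the export is UTF-16LE (with or without a BOM) and starts with an XML declaration that names the encoding. That declaration has to be rewritten too, otherwise an XML parser will try to read the UTF-8 bytes as UTF-16. The function name is hypothetical.

```python
import re
import shutil

# Matches the encoding pseudo-attribute of an XML declaration, e.g. encoding="utf-16".
_DECL_ENCODING = re.compile(r'^(\s*<\?xml[^>]*?encoding\s*=\s*)(["\'])[^"\']*\2')


def tmx_utf16le_to_utf8(src_path: str, dst_path: str) -> None:
    """Re-encode a UTF-16LE TMX file as UTF-8, streaming so large exports never sit in memory."""
    with open(src_path, "r", encoding="utf-16-le", newline="") as src, \
         open(dst_path, "w", encoding="utf-8", newline="") as dst:
        # The XML declaration is at the very start, so the first chunk contains it.
        head = src.read(4096)
        # The 'utf-16-le' codec keeps a BOM as U+FEFF, so drop it explicitly.
        head = head.removeprefix("\ufeff")
        # The declaration must no longer claim UTF-16 once the bytes are UTF-8.
        head = _DECL_ENCODING.sub(r"\1\2UTF-8\2", head, count=1)
        dst.write(head)
        # Copy the rest in chunks; the text layers decode UTF-16LE and encode UTF-8.
        shutil.copyfileobj(src, dst)
```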

Moreover, you can’t turn on compression when transferring files via their API. TMX is basically XML, i.e. plain text, and it compresses beautifully. Right now we have more than 4 GB worth of TMX files (after conversion to UTF-8), which compress to just ~420 MB. Yep, it’s that good. But each week I need to transfer ~7 GB of data (don’t forget they use UTF-16LE on the server), because there’s no option to compress it before the transfer. At least I can upload the TMX back to the server UTF-8 encoded.
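The API won’t compress anything for you, but for storage on your own side it’s easy to do. A minimal sketch using Python’s standard gzip module follows; the function and file names are placeholders, and the ratio you get depends on your data and on the compressor you pick.

```python
import gzip
import os
import shutil


def gzip_file(src_path: str, dst_path: str) -> float:
    """Gzip src_path into dst_path and return the compressed/original size ratio."""
    # Stream in 1 MiB chunks so multi-GB TMX files never have to fit in memory.
    with open(src_path, "rb") as src, gzip.open(dst_path, "wb", compresslevel=9) as dst:
        shutil.copyfileobj(src, dst, length=1024 * 1024)
    original = os.path.getsize(src_path)
    return os.path.getsize(dst_path) / original if original else 0.0
```

Repetitive markup like TMX’s `<tu>`/`<tuv>`/`<seg>` elements is exactly what DEFLATE handles well, which is why XML shrinks so much.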

And they use UTF-16LE for absolutely everything. Recently I had to create an app that converts a Trados analysis into a memoQ-compatible one, so we could use it in Plunet (a TMS). I had to create a CSV file and, of course, it needs to be encoded in UTF-16LE. What’s funny is that they’ve designed their CSVs to be human- rather than machine-readable, yet Excel, for instance, won’t open a CSV encoded this way correctly, and I don’t know any regular user who opens this type of file with anything else.
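When some files come out of memoQ as UTF-16LE and everything else is UTF-8, a tool that handles both has to tell them apart. A small Python sketch that sniffs the byte order mark (BOM) follows. It assumes the files carry a BOM; when there is none it falls back to UTF-8, which is a guess, not detection. The function name is hypothetical.

```python
import codecs

# UTF-32LE is checked before UTF-16LE because its BOM starts with the same two bytes.
_BOMS = [
    (codecs.BOM_UTF32_LE, "utf-32"),
    (codecs.BOM_UTF32_BE, "utf-32"),
    (codecs.BOM_UTF8, "utf-8-sig"),
    (codecs.BOM_UTF16_LE, "utf-16"),
    (codecs.BOM_UTF16_BE, "utf-16"),
]


def sniff_encoding(path: str) -> str:
    """Return a codec name for open() based on the file's BOM, or 'utf-8' if there is none."""
    with open(path, "rb") as f:
        start = f.read(4)
    for bom, codec in _BOMS:
        if start.startswith(bom):
            # 'utf-16', 'utf-32' and 'utf-8-sig' read the BOM and strip it when decoding.
            return codec
    # No BOM: assume UTF-8 (an assumption, not detection).
    return "utf-8"
```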

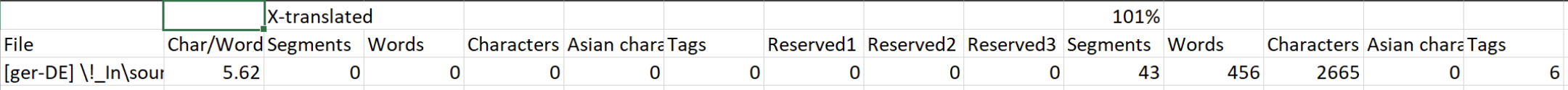

Just look at it:

```csv
,,X-translated,,,,,,,,101%,,,,,,,,Repetitions,,,,,,,,100%,,,,,,,,95% - 99%,,,,,,,,85% - 94%,,,,,,,,75% - 84%,,,,,,,,50% - 74%,,,,,,,,No match,,,,,,,,Fragments,,,,,,,,Total,,,,,,,,
File,Char/Word,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,Segments,Words,Characters,Asian characters,Tags,Reserved1,Reserved2,Reserved3,
"[ger-DE] \!_In\source\en\378499.xml",5.62,0,0,0,0,0,0,0,0,43,456,2665,0,6,0,0,0,9,138,816,0,0,0,0,0,114,242,1356,0,261,0,0,0,2,21,115,0,0,0,0,0,4,92,524,0,3,0,0,0,0,0,0,0,0,0,0,0,14,206,1263,0,0,0,0,0,51,1167,6307,0,0,0,0,0,0,0,0,0,0,0,0,0,237,2322,13046,0,270,0,0,0,
```

I can’t sanely read it with any serialiser I know. I’d have to get rid of the first line and then map each field to my object by index rather than by field name, as the field names aren’t unique. But if you convert the file to UTF-8 first, it looks nice in Excel.
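Reading it programmatically is still manageable if each column’s name is built from both header rows instead of from the second row alone. A Python sketch follows, assuming the layout of the sample above (the category is named only in the first column of each block, and every line ends with a comma) and UTF-16LE input. The function name and the tuple keys are hypothetical, not anything memoQ defines.

```python
import csv
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (match category, field), e.g. ("101%", "Words")


def read_analysis_csv(path: str, encoding: str = "utf-16-le") -> List[Dict[Key, str]]:
    """Read a memoQ-style analysis CSV with a two-row header into one dict per data row."""
    with open(path, encoding=encoding, newline="") as f:
        # Drop blank lines so the two header rows are always rows[0] and rows[1].
        rows = [row for row in csv.reader(f) if any(row)]
    # The 'utf-16-le' codec does not strip a BOM, so remove it from the very first cell.
    rows[0][0] = rows[0][0].lstrip("\ufeff")
    groups, fields = rows[0], rows[1]
    columns: List[Tuple[int, Key]] = []
    current = ""
    for i, (group, field) in enumerate(zip(groups, fields)):
        # Only the first column of each block names its category; carry it forward.
        current = group or current
        if field:  # the trailing comma produces an empty, nameless last column
            columns.append((i, (current, field)))
    return [{key: row[i] for i, key in columns if i < len(row)} for row in rows[2:]]
```

With composite keys, `row[("100%", "Words")]` and `row[("Total", "Words")]` no longer collide, so nothing has to be mapped by index.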

I believe looking nice in Excel was the only reason they designed it this way, because I don’t presume they intentionally wanted to make my life hard by making their output files non-machine-readable. Moreover, if you’ve paid attention, there are 90 commas in each line, but there are also 90 fields. If you know anything about CSV, you know that commas separate values (fields), so the last comma is redundant. Yeah, the way they handle this format drives me crazy. On top of that, and this may be Plunet’s quirk rather than memoQ’s (I can’t tell), the value in the first field (File) always needs to be enclosed in quotes, even though according to the CSV specification it only has to be when it contains a comma, a double quote or a line break. memoQ has 3 other export formats (2 CSVs and HTML), but they’re all horrible.
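Producing such a file from code means working around a generic CSV writer rather than with it. Here is a Python sketch that reproduces the quirks described above: the File value is always quoted, every line ends with a comma, and the output is UTF-16LE. The function names are hypothetical, and the BOM and CRLF line endings are assumptions worth checking against a file exported by your own memoQ/Plunet versions.

```python
from typing import Iterable, List


def format_row(file_name: str, values: Iterable[str]) -> str:
    """Format one data row: quoted File value, plain values, trailing comma."""
    # Always quote the File value, doubling any embedded quotes as CSV requires.
    quoted = '"' + file_name.replace('"', '""') + '"'
    # Every field, the last one included, is followed by a comma, as in memoQ's output.
    return ",".join([quoted, *values]) + ","


def write_analysis_csv(path: str, header_rows: List[str], data_rows: List[str]) -> None:
    """Write pre-formatted lines as UTF-16LE with a BOM and CRLF endings (both assumed)."""
    with open(path, "w", encoding="utf-16-le", newline="") as f:
        f.write("\ufeff")  # the 'utf-16-le' codec never writes a BOM on its own
        for line in [*header_rows, *data_rows]:
            f.write(line + "\r\n")
```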

Trados isn’t any better. Its CSVs are equally, if not more, machine-unreadable. Its XML is a bit saner, and that’s what I use as the input for my conversion.

All of the above is just my perception of things. I don’t know what memoQ based these design decisions on. Maybe they make sense for the majority of their customers. Or at least they made sense in the past, and now they’re just left with this legacy, which is hard to get rid of because it’s so deep in their system. No clue. But treat it as a warning: go with the simplest solutions in your design and don’t overcomplicate things.

UPDATE:

And of course, the day after I published this, I discovered yet another annoying thing. Access to the memoQ WS Service is granted by external IP address: you add your IP via their GUI and you have access. It’s also possible to grant access with an API key, but that isn’t supported in the version of Plunet we’re currently using, and Plunet must be connected to memoQ.

OK, so back to memoQ and its configuration. We don’t have a static external IP, but we have been assigned a whole subnet. Great! However, it’s not possible to enable access to the WS Service for a subnet. Not great. Moreover, the GUI for adding IP addresses takes each address as 4 separate octets, which means we would have to add 254 addresses by copy-pasting them manually, one octet at a time. Which is a nightmare :(
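Just to show where the 254 comes from: a /24 subnet has 256 addresses, minus the network and broadcast addresses. A Python sketch with the standard ipaddress module that lists them split into the four octets the GUI asks for follows. 203.0.113.0/24 is a reserved documentation range standing in for the real subnet, the /24 prefix is an assumption based on the 254 figure, and the function name is hypothetical.

```python
import ipaddress
from typing import List, Tuple


def host_octets(subnet: str) -> List[Tuple[int, ...]]:
    """List every usable host address in an IPv4 subnet, split into its four octets."""
    network = ipaddress.IPv4Network(subnet)
    # hosts() skips the network and broadcast addresses, so a /24 yields 254 entries.
    return [tuple(addr.packed) for addr in network.hosts()]


for octets in host_octets("203.0.113.0/24")[:3]:
    print(*octets)
```

That gives you the list to copy from; it doesn’t make the pasting any less tedious.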